LunarFM - Learning the Language of the Moon

For decades, lunar missions have collected extraordinary datasets: optical imagery, thermal maps, altimetry, radar backscatter, gravity anomalies, each revealing a different facet of the Moon.

But none of these datasets speak the same language.

At Lunarlab this summer, researchers built something groundbreaking: LunarFM, a multimodal lunar foundation model that learns directly from decades of observations and fuses them into a single representation of the Moon.

Read the full blog below:

Can we talk to the Moon?

Learning the language of the lunar surface through the power of AI

At FDL’s Lunarlab, our team built something that only a few years ago would have sounded like science fiction: LunarFM, a multimodal lunar foundation model that learns directly from decades of lunar orbital observations and turns them into a unified representation or “language” of the Moon. LunarFM makes it possible for researchers and mission planners to interrogate, extend, and build upon this shared representation.

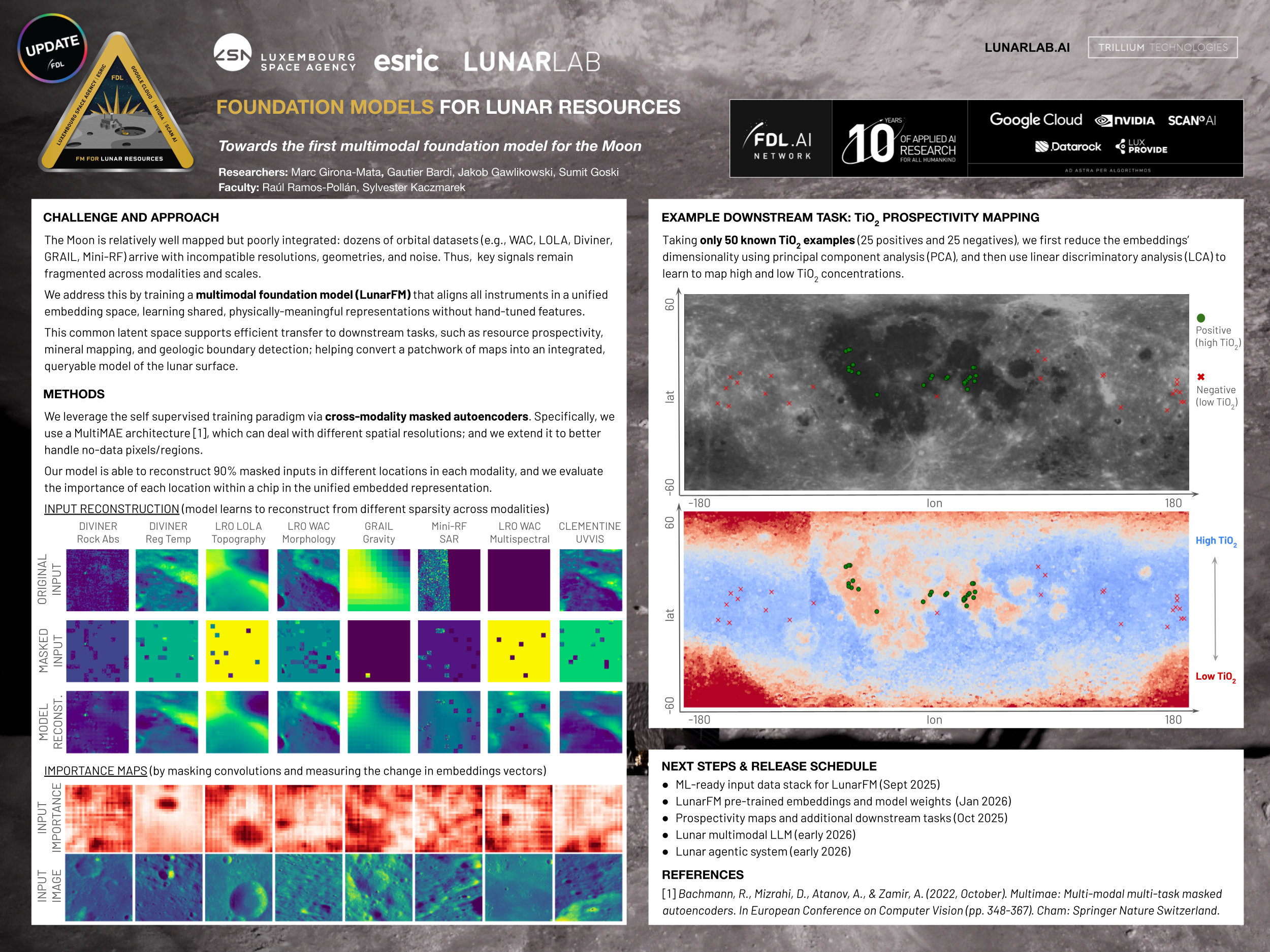

For many decades, lunar missions have collected an extraordinary spectrum of datasets spanning optical imagery, laser altimetry, thermal measurements, radar backscatter, and global gravity. Each dataset reveals a different facet of the Moon’s surface and geology. But each comes with its own resolutions, noise, artefacts, and coverage gaps. Leveraging these datasets has traditionally required painstaking, mission-by-mission preprocessing, making it very difficult for them to be combined. At a moment when lunar exploration is accelerating, the field lacks a single system capable of learning from all these modalities at once and capturing their shared structure in a unified space.

This is where foundation models mark a genuine shift in how we work with planetary data. Rather than being taught or supervised through labelled examples, a foundation model learns in a self-supervised way: it predicts masked or missing parts of its own input. This allows it to extract deep, latent structure directly from data, discovering relationships and correlations that might never appear in a setting where the AI learns to perform a single task in a supervised way. In other words, foundation models turn vast unlabelled archives (like the lunar record) into an accessible asset.

LunarFM applies this paradigm shift by fusing 18 lunar data layers via a multimodal encoder model that yields a compact vector representation for each half-degree “chip” of the Moon. These vectors, or embeddings, capture the essential cross-modal relationships: from how thermal behaviour interacts with topography, to how radar roughness aligns with rock abundance, to how gravity anomalies correlate with geologic units. The result is a single, coherent representation of the lunar surface.

With this unified embedding space, a wide range of downstream tasks becomes dramatically more efficient. Models built on top of LunarFM can classify geologic units, estimate mineral concentrations, or retrieve regions with similar geophysical characteristics; and critically, these models achieve strong performance with only a small number of labelled examples to learn from. This few-shot learning capability is especially important in lunar science, where high-quality in-situ measurements are rare. LunarFM concentrates the learning up front, allowing later tasks to succeed with limited data while maintaining scientific rigour.

To make these capabilities accessible, we’ve also developed Agent Luna, a lightweight agentic interface built on top of LunarFM’s embeddings. Users can pose questions in natural language (e.g., “find areas similar to the Chang’e-5 landing site”, “locate regions with thermal behaviour like Mare Tranquillitatis”) and Agent Luna serves as a conversational gateway into the model, enabling rapid discovery without requiring deep expertise in every underlying dataset.

As part of Lunarlab 2025, we are openly releasing a full ecosystem: the LunarFM embeddings, the complete training codebase, Agent Luna, an AI-ready multimodal lunar data stack, and a benchmark suite of downstream tasks to support fair, reproducible model comparisons. By lowering technical barriers and providing standardised evaluation benchmarks, our goal is to accelerate progress across lunar science, resource prospecting, and mission planning.

We’re convinced that this unified foundation has the potential to transform how we explore the Moon, shifting from fragmented datasets and manual pipelines to a shared, data-driven representation that brings coherence, speed, and novel discovery pathways to lunar exploration.

- Written by Marc Girona-Mata & Jakob Gawlikowski, FDL Lunarlab researchers

The entire ecosystem, embeddings, data stack, codebase, and benchmarks is being openly released as part of Lunarlab 2025.

A foundation for the next era of lunar exploration, enabling science, resource prospecting, and mission planning to move with new speed and coherence.