Foundation models for science

Foundation models potentially represent a paradigm shift in machine learning: from single-purpose, supervised machine learning pipelines to general-purpose, self-supervised models running at enormous scale.

Foundation models can:

act as a queryable knowledge store of large volumes of data

democratize the use of AI: enabling faster roll-out of capabilities such as segmentation, classification, object detection, change detection and regression, and making them accessible even to people without coding skills

generalize well: maintain high performance across distinct geographic areas or between time periods, and

be generative: producing predictions and synthetic data, and modeling phenomena.

Foundation models distill the important information from multiple sensors and simulations into lower-dimensional ‘embeddings’. These embeddings are much easier to query than the raw data, and can be used to generate new insight-level data or to answer questions directly.

However, the journey from raw data to embeddings involves trade-offs, so building these models requires science-led thinking about how to make them genuinely useful tools for discovery and analysis.
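As a minimal illustration of what "querying" an embedding space can look like, the sketch below ranks stored embeddings by cosine similarity to a query vector and returns the closest matches. The item IDs and vectors are hypothetical toy values, not outputs of any Trillium model, and cosine-similarity nearest-neighbour search is only one common way to query embeddings.

```python
import math

# Hypothetical embeddings keyed by item ID. In practice these vectors would
# come from a foundation model's encoder; here they are hand-written 3-D toys.
EMBEDDINGS = {
    "tile_a": [0.9, 0.1, 0.0],
    "tile_b": [0.8, 0.2, 0.1],
    "tile_c": [0.0, 0.1, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


def query(store, query_vec, k=2):
    """Return the IDs of the k stored embeddings most similar to query_vec."""
    ranked = sorted(
        store,
        key=lambda item_id: cosine_similarity(store[item_id], query_vec),
        reverse=True,
    )
    return ranked[:k]


print(query(EMBEDDINGS, [1.0, 0.0, 0.0]))  # ['tile_a', 'tile_b']
```

Because the comparison happens in a low-dimensional space, the same pattern scales to large archives when paired with an approximate nearest-neighbour index.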

Trillium’s Foundation Models and tools:

SDO-FM (in partnership with NASA and Google Cloud) is a multimodal foundation model of our Sun that aggregates solar magnetic field data and multi-band images of the Sun’s atmosphere into a shared embedding space.

SDO-FM uses the SDOML V2 analysis-ready data product.

An overview of SDOML V2 can be viewed here:

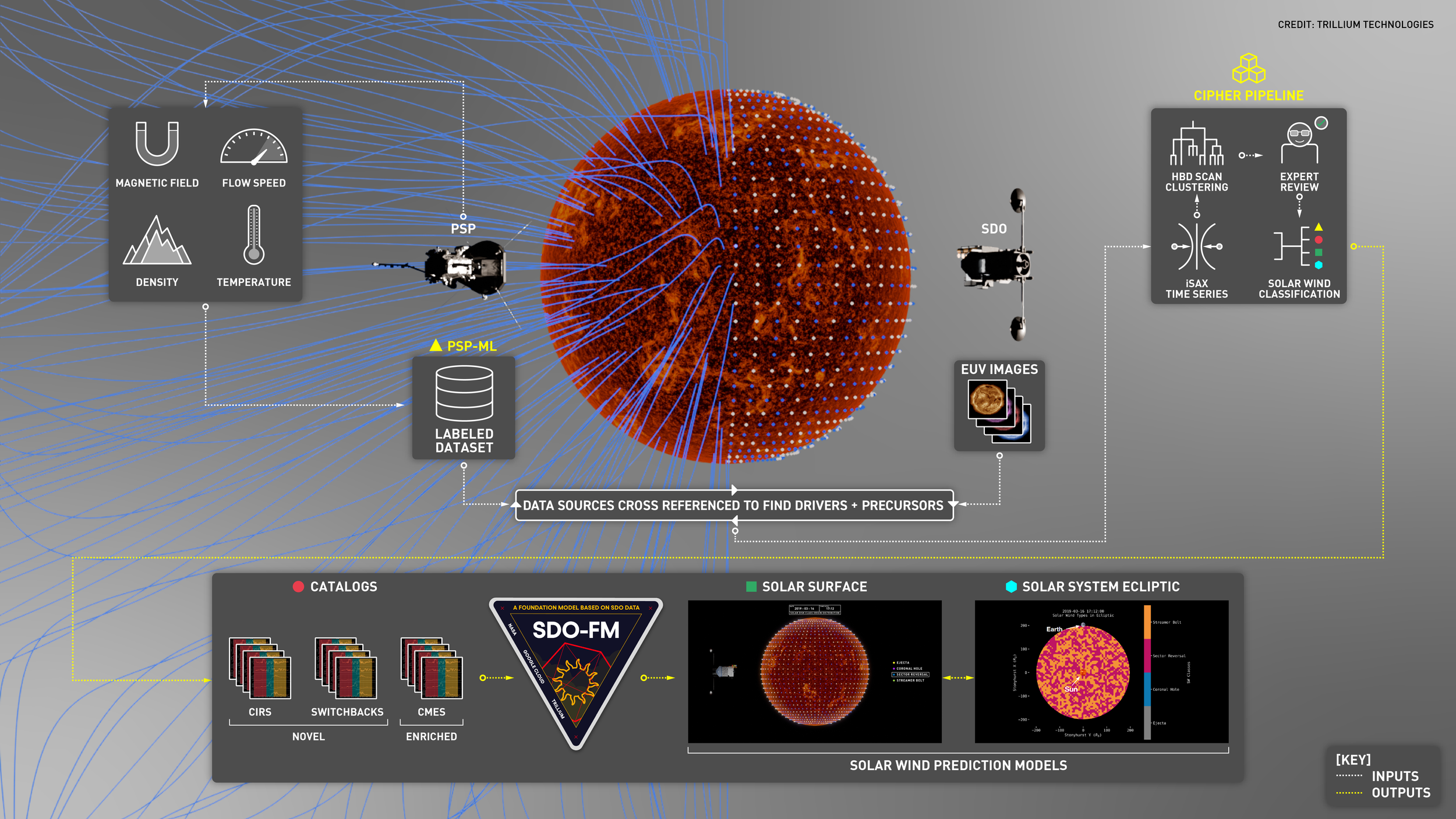

FDL Heliolab’s Decoding Solar Wind Structure pipeline uses SDO-FM to help characterize the solar wind. This is the first time a scientific foundation model of the Sun has been used in a novel downstream application.

SAR-FM (in partnership with the LSA, Lux Provide and ESA) is a foundation model trained on synthetic aperture radar (SAR) data, designed to generalize across geographic regions and to support a broad range of downstream applications.

SAR-FM won best paper at the Climatechange.ai workshop at NeurIPS 2023. The paper can be viewed here: https://www.climatechange.ai/papers/neurips2023/76

Surya (in partnership with NASA, SwRI and IBM) is a 360-million-parameter heliophysics foundation model trained on more than 200 TB of solar data collected over nine years by the Solar Dynamics Observatory. Surya leverages advances in AI to analyze vast solar datasets, improving our understanding of solar eruptions and enabling more accurate space weather forecasts that protect satellites, power grids and communication systems.

An overview of Surya and the upcoming workshop can be viewed here: http://heliofm.org/

LunarFM (in partnership with LSA and ESRIC) is a multimodal foundation model for the Moon that integrates 18 distinct data layers from multiple orbital missions, including NASA’s Lunar Reconnaissance Orbiter (LRO), GRAIL and Clementine, into a single, unified architecture.

A full overview of LunarFM, including the release schedule and technical briefing, can be viewed here: https://lunarlab.ai/a-lunar-fm

Read more about situational intelligence for the Moon here: https://trillium.tech/impact-section/lunar-systems-intelligence-talking-to-the-moon

MAESTRO (Multi-domain Assessment of Embeddings for Science Results and Outcomes)

FDL’s MAESTRO probes foundation models for efficacy against specific performance criteria, using adaptive querying to continuously evaluate embeddings and select those best aligned with the task.
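MAESTRO's actual querying procedure is not described here, so the following is only a generic sketch of the "evaluate and select" idea, assuming a small labelled dataset for a downstream task. Each candidate model's embeddings are scored with a lightweight probe (a leave-one-out nearest-centroid classifier, swapped in for simplicity), and the highest-scoring embedding is selected. The model names, labels and vectors are hypothetical, and the sketch does not implement adaptive querying.

```python
import math

# Hypothetical task labels and candidate embeddings (one vector per sample)
# from two hypothetical models; real embeddings would be much higher-dimensional.
LABELS = ["flare", "flare", "quiet", "quiet"]
CANDIDATES = {
    "model_a": [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]],
    "model_b": [[0.5, 0.5], [0.0, 1.0], [1.0, 0.0], [0.5, 0.4]],
}


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def probe_accuracy(X, y):
    """Leave-one-out accuracy of a nearest-centroid classifier on embeddings X."""
    correct = 0
    for i in range(len(X)):
        # Fit class centroids on every sample except the held-out one.
        train = [(x, lab) for j, (x, lab) in enumerate(zip(X, y)) if j != i]
        classes = sorted({lab for _, lab in train})
        cents = {c: centroid([x for x, lab in train if lab == c]) for c in classes}
        # Predict the class whose centroid is closest (Euclidean distance).
        pred = min(classes, key=lambda c: math.dist(X[i], cents[c]))
        correct += pred == y[i]
    return correct / len(X)


def select_embedding(candidates, labels):
    """Score every candidate embedding; return the best name and all scores."""
    scores = {name: probe_accuracy(X, labels) for name, X in candidates.items()}
    return max(scores, key=scores.get), scores


best, scores = select_embedding(CANDIDATES, LABELS)
print(best, scores)  # model_a {'model_a': 1.0, 'model_b': 0.5}
```

With these toy values, model_a's embeddings separate the two classes cleanly and are selected. In practice, linear probes trained on held-out splits are a common choice; nearest-centroid is used here only to keep the example dependency-free.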

SHRUG-FM (in partnership with ESA Earth Systems Lab, eslab.ai) was developed by the Foundation Models in Extreme Environments 2025 team. It is a framework that helps foundation models flag when they may fail, addressing two primary types of uncertainty: “not knowing because of the data” and “not knowing because of the model”.

Read more about the team’s research outcomes here: https://trillium.tech/impact-section/can-we-know-where-when-and-why-our-ai-earth-models-fail
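The two uncertainty types SHRUG-FM addresses are commonly called aleatoric ("not knowing because of the data") and epistemic ("not knowing because of the model") uncertainty. One widely used way to separate them, not necessarily the approach SHRUG-FM takes, is to run an ensemble of models: the entropy of the averaged prediction is the total uncertainty, the average entropy of the individual members is the aleatoric part, and the difference (member disagreement) is the epistemic part. The ensemble outputs below are hypothetical.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def decompose_uncertainty(member_probs):
    """Split an ensemble's predictive uncertainty into aleatoric and epistemic parts.

    member_probs: one class-probability vector per ensemble member.
    total     = entropy of the averaged prediction
    aleatoric = average entropy of the individual members (data uncertainty)
    epistemic = total - aleatoric, i.e. member disagreement (model uncertainty)
    """
    n = len(member_probs)
    mean = [sum(col) / n for col in zip(*member_probs)]
    total = entropy(mean)
    aleatoric = sum(entropy(p) for p in member_probs) / n
    return total, aleatoric, total - aleatoric


# Hypothetical ensemble outputs for two inputs and two classes.
ambiguous_input = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]  # members agree it is a coin flip
unfamiliar_input = [[0.99, 0.01], [0.01, 0.99]]          # members are confident but disagree

for name, probs in [("ambiguous", ambiguous_input), ("unfamiliar", unfamiliar_input)]:
    total, aleatoric, epistemic = decompose_uncertainty(probs)
    print(f"{name}: total={total:.3f} aleatoric={aleatoric:.3f} epistemic={epistemic:.3f}")
```

Both inputs have the same total uncertainty, but for the ambiguous input it is entirely aleatoric, while for the unfamiliar input it is mostly epistemic, which is the kind of signal a failure-flagging framework can act on.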